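Step 1: reshape each image from 28×28 into a 784-element vector (1×784):

This reshape is needed because the input layer of a fully connected network (FCN) expects a flat vector. The images are also normalized by dividing every pixel value by 255, which maps the 0 to 255 grayscale range onto 0 to 1. Finally, the labels are converted to one-hot encoding.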

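```python
# Imports for this step (to_categorical replaces the older np_utils module)
from keras.datasets import mnist
from keras.utils import to_categorical

# Assumes the MNIST arrays were loaded like this in an earlier step
(x_train_image, y_train_label), (x_test_image, y_test_label) = mnist.load_data()

# Flatten 28x28 images into 784-element float vectors
x_Train = x_train_image.reshape(60000, 784).astype('float32')
x_Test = x_test_image.reshape(10000, 784).astype('float32')

# Scale pixel values from 0-255 to 0-1
x_Train_normalize = x_Train / 255
x_Test_normalize = x_Test / 255

# One-hot encode the digit labels (10 classes)
y_TrainOneHot = to_categorical(y_train_label)
y_TestOneHot = to_categorical(y_test_label)
```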
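To see what the reshape, scaling, and one-hot steps do to the numbers, here is a small NumPy-only sketch. It assumes randomly generated stand-in images with MNIST's 28×28 shape instead of the real dataset, and it builds the one-hot rows with `np.eye` rather than `to_categorical`; both choices are only so the snippet runs without downloading anything.

```python
import numpy as np

# Hypothetical stand-in for MNIST: 5 random uint8 "images" of shape 28x28
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28)).astype(np.uint8)
labels = np.array([3, 0, 9, 1, 3])

# Flatten each 28x28 image into a 784-element row and scale to [0, 1]
flat = images.reshape(len(images), 28 * 28).astype("float32") / 255

# One-hot encode: row i of the 10x10 identity matrix represents digit i,
# which matches what to_categorical produces for 10 classes
one_hot = np.eye(10, dtype="float32")[labels]

print(flat.shape, flat.min(), flat.max())
print(one_hot[0])
```

Each row of `flat` is one image read left to right, top to bottom, and each row of `one_hot` has a single 1 at the position of its digit.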

Step 2: build the multilayer perceptron (MLP) model:

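```python
from keras.models import Sequential
from keras.layers import Dense

model = Sequential()
# Hidden layer: 784 inputs -> 256 ReLU units
model.add(Dense(units=256, input_dim=784, kernel_initializer='normal', activation='relu'))
# Output layer: 10 softmax units, one per digit
model.add(Dense(units=10, kernel_initializer='normal', activation='softmax'))

# summary() prints the table itself and returns None, so print() is not needed
model.summary()
```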

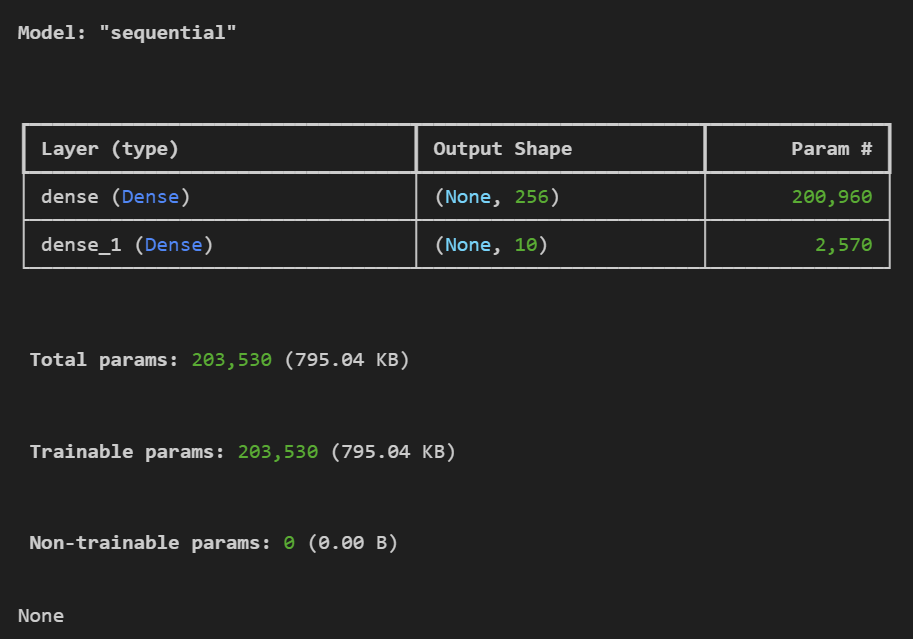

The output above shows a two-layer model: a hidden layer with 256 neurons and an output layer with 10 neurons.
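Conceptually, the two `Dense` layers compute `relu(x @ W1 + b1)` and then `softmax(h @ W2 + b2)`. The NumPy sketch below traces the shapes through that forward pass. The random weights (normal distribution, stddev 0.05) only roughly imitate `kernel_initializer='normal'`, and the three input rows are made up.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract each row's max before exponentiating for numerical stability
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
# Assumed initialization: small normal weights, zero biases
W1, b1 = rng.normal(0.0, 0.05, size=(784, 256)), np.zeros(256)
W2, b2 = rng.normal(0.0, 0.05, size=(256, 10)), np.zeros(10)

x = rng.random((3, 784))           # three hypothetical flattened images
hidden = relu(x @ W1 + b1)         # like Dense(256, activation='relu')
probs = softmax(hidden @ W2 + b2)  # like Dense(10, activation='softmax')

print(hidden.shape, probs.shape, probs.sum(axis=1))
```

Each output row is a probability distribution over the 10 digits, which is why the output layer uses softmax together with one-hot labels.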

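The Param # column counts each layer's weights plus biases. The hidden layer has $256 \times 784 + 256 = 200960$ parameters, and the output layer has $256 \times 10 + 10 = 2570$.

The bottom of the table gives the total number of trainable parameters: $200960 + 2570 = 203530$.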
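The same arithmetic as a tiny helper: a `Dense` layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases. The function name `dense_params` is hypothetical, chosen only for illustration.

```python
def dense_params(n_in: int, n_out: int) -> int:
    # n_in * n_out weights (one per input/unit pair) plus n_out biases
    return n_in * n_out + n_out

# Model from step 2: 784 -> 256 -> 10
hidden_params = dense_params(784, 256)
output_params = dense_params(256, 10)
print(hidden_params, output_params, hidden_params + output_params)
# -> 200960 2570 203530

# Network in the full listing at the end: 784 -> 128 -> 64 -> 10
# (Dropout has no trainable parameters)
full_listing_params = dense_params(784, 128) + dense_params(128, 64) + dense_params(64, 10)
print(full_listing_params)
# -> 109386
```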

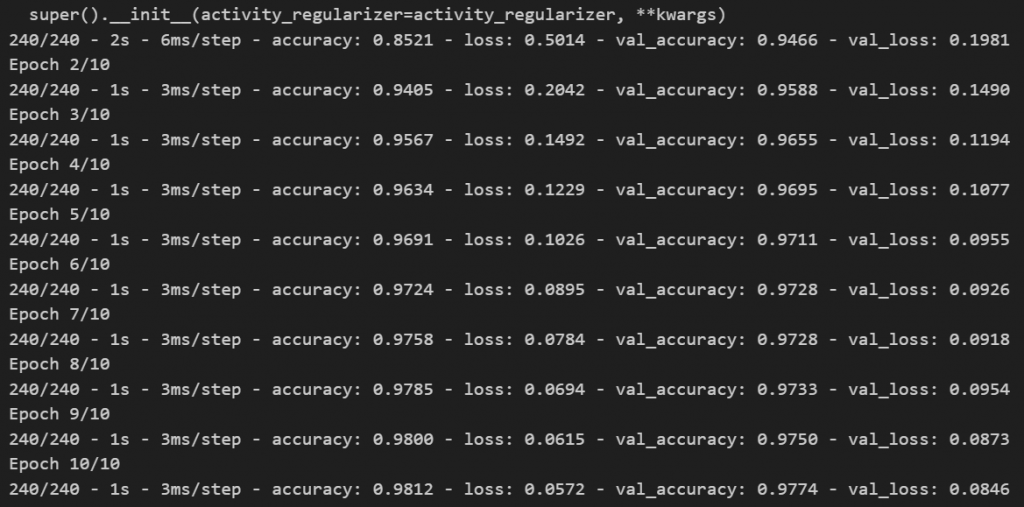

Step 3: compile and train the model:

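```python
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# Hold out 20% of the training data for validation; 10 epochs, 200 samples per batch
train_history = model.fit(x=x_Train_normalize, y=y_TrainOneHot,
                          validation_split=0.2, epochs=10, batch_size=200, verbose=2)
```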

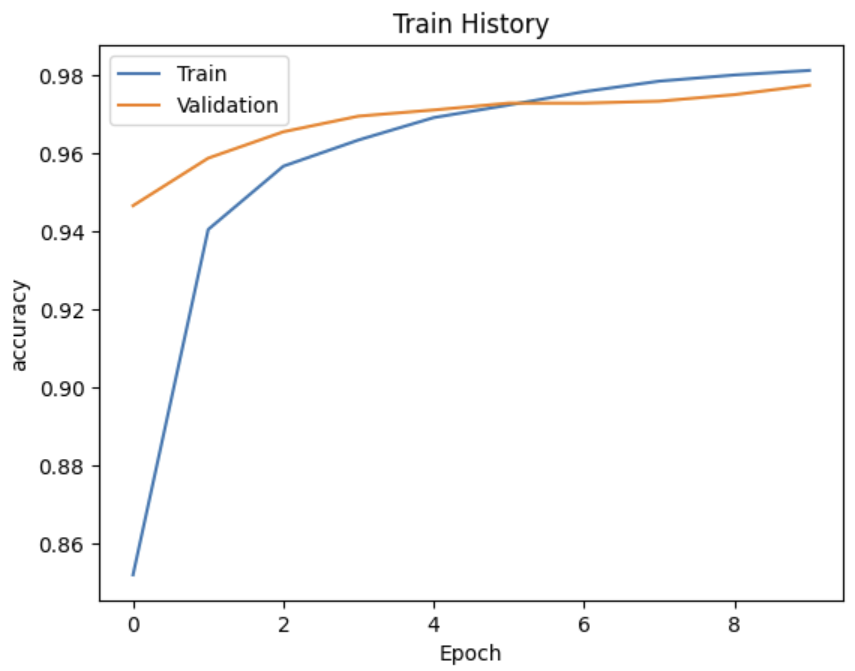

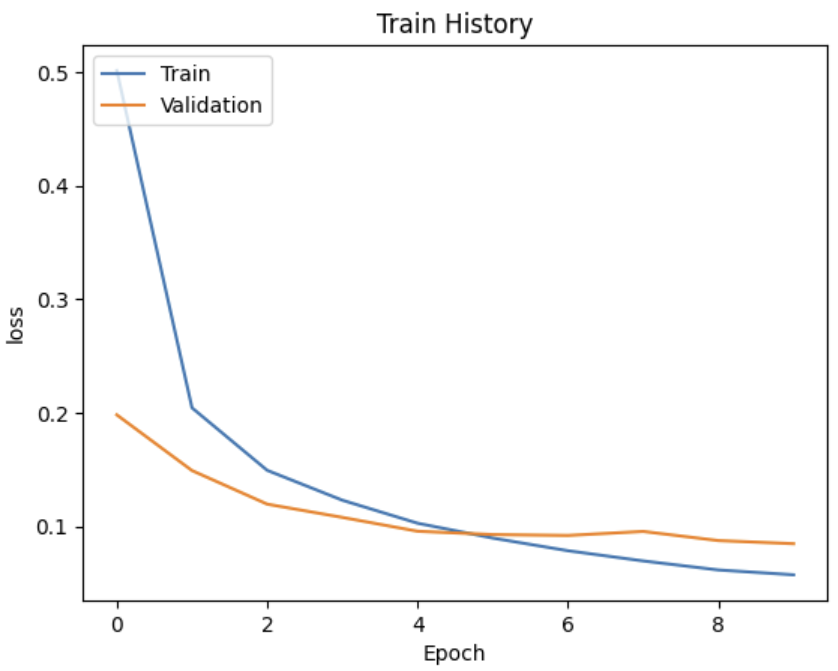
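Two of the `compile`/`fit` settings are easy to check by hand. The sketch below writes the categorical cross-entropy formula out in NumPy (a plain restatement of the formula, not Keras's internal implementation; the toy labels and predictions are made up) and works out what `validation_split=0.2` and `batch_size=200` mean for the 60000 MNIST training images. Keras documents that the validation data is taken from the last samples of the training set, before shuffling.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean over samples of -sum(y_true * log(y_pred)); clipping avoids log(0)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

# Toy 3-class example: only the probability of the true class matters
y_true = np.array([[0, 1, 0], [1, 0, 0]], dtype=float)
y_pred = np.array([[0.1, 0.8, 0.1], [0.6, 0.3, 0.1]])
loss = categorical_crossentropy(y_true, y_pred)  # = -(ln 0.8 + ln 0.6) / 2

# validation_split=0.2 holds out the last 20% of the 60000 training samples
n_total = 60000
n_val = round(n_total * 0.2)
n_train = n_total - n_val
steps_per_epoch = -(-n_train // 200)  # ceiling division for batch_size=200

print(round(loss, 4), n_train, n_val, steps_per_epoch)
```

So each epoch trains on 48000 images in 240 batches and reports validation metrics on the remaining 12000.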

Step 4: plot the training history:

```python
import matplotlib.pyplot as plt

def show_train_history(train_history, metric, val_metric):
    # Plot one metric for the training and validation sets, epoch by epoch
    plt.plot(train_history.history[metric])
    plt.plot(train_history.history[val_metric])
    plt.title('Train History')
    plt.ylabel(metric)
    plt.xlabel('Epoch')
    plt.legend(['Train', 'Validation'], loc='upper left')
    plt.show()

show_train_history(train_history, 'accuracy', 'val_accuracy')
show_train_history(train_history, 'loss', 'val_loss')
```

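Finally, here is the complete program in one piece. Note that it is written against `tf.keras` and uses a somewhat different network from step 2 (two hidden layers of 128 and 64 ReLU units, with `Dropout(0.2)` after the first), so its `summary()` parameter counts will not match the figures above: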

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, models
from tensorflow.keras.datasets import mnist
import matplotlib.pyplot as plt

# Load MNIST, scale pixels to [0, 1], then flatten each 28x28 image to 784 values
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train = x_train.astype('float32') / 255.0
x_test = x_test.astype('float32') / 255.0
x_train = x_train.reshape(-1, 28 * 28)
x_test = x_test.reshape(-1, 28 * 28)

# One-hot encode the labels
y_train_one_hot = tf.keras.utils.to_categorical(y_train, num_classes=10)
y_test_one_hot = tf.keras.utils.to_categorical(y_test, num_classes=10)

# 784 -> 128 (ReLU) -> Dropout(0.2) -> 64 (ReLU) -> 10 (softmax)
model = models.Sequential([
    layers.Dense(128, activation='relu', input_shape=(784,)),
    layers.Dropout(0.2),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
train_history = model.fit(x=x_train, y=y_train_one_hot, validation_split=0.2,
                          epochs=10, batch_size=200, verbose=2)

def show_train_history(train_history, metric, val_metric):
    # Plot one metric for the training and validation sets, epoch by epoch
    plt.plot(train_history.history[metric])
    plt.plot(train_history.history[val_metric])
    plt.title('Train History')
    plt.ylabel(metric)
    plt.xlabel('Epoch')
    plt.legend(['Train', 'Validation'], loc='upper left')
    plt.show()

show_train_history(train_history, 'accuracy', 'val_accuracy')
show_train_history(train_history, 'loss', 'val_loss')
```
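The full listing adds `layers.Dropout(0.2)`, which Keras documents as randomly setting a fraction `rate` of inputs to 0 during training and scaling the remaining inputs by `1 / (1 - rate)`, while passing inputs through unchanged at inference. The NumPy sketch below reproduces that "inverted dropout" behaviour; the function name `dropout` and the all-ones test input are hypothetical, for illustration only.

```python
import numpy as np

def dropout(x, rate, training, rng):
    # Inverted dropout: during training, zero out about `rate` of the values
    # and scale the survivors by 1/(1 - rate) so the expected value is
    # unchanged; at inference time, return the input untouched.
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
x = np.ones((1000, 128))  # e.g. 1000 samples of a 128-unit hidden layer
out_train = dropout(x, 0.2, training=True, rng=rng)
out_infer = dropout(x, 0.2, training=False, rng=rng)

print((out_train == 0).mean(), out_train.mean(), np.array_equal(out_infer, x))
```

About 20% of the training-time activations become 0 and the rest become 1.25, so the mean stays close to 1; this is why no rescaling is needed when the trained model is used for prediction.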